MIXED METHODS AND CROSS-CUTTING APPROACHES

16 Mixed methods

Pierre Pluye

Abstract

Mixed methods refer to the integration of qualitative and quantitative methods in an evaluation or research project. The approach involves planning this integration at every stage of the project, from formulating the research questions, through the literature review, to data collection and analysis. Mixed methods can make a greater descriptive, explanatory or predictive contribution than either qualitative or quantitative methods taken separately.

Keywords: Mixed methods, integration, sequential exploratory design, sequential explanatory design, convergent design, mixed methods literature review

I. What do these methods consist of?

Any programme can be evaluated by combining the power of words (sounds and images) with the power of numbers (Pluye and Hong 2014). For example, you can collect stories from stakeholders and users that illustrate successes or failures, from which practical lessons rooted in their experience can be drawn to improve an intervention. In addition, you can collect available statistics on that intervention, or plan to collect them cross-sectionally (e.g., with a survey) or longitudinally (e.g., with routine data collection built into daily activities). Integrating stories and statistics is a powerful way to address complex policy challenges and questions. The following sections present the mixed methods approach at each stage of the research.

Clearly formulating specific questions

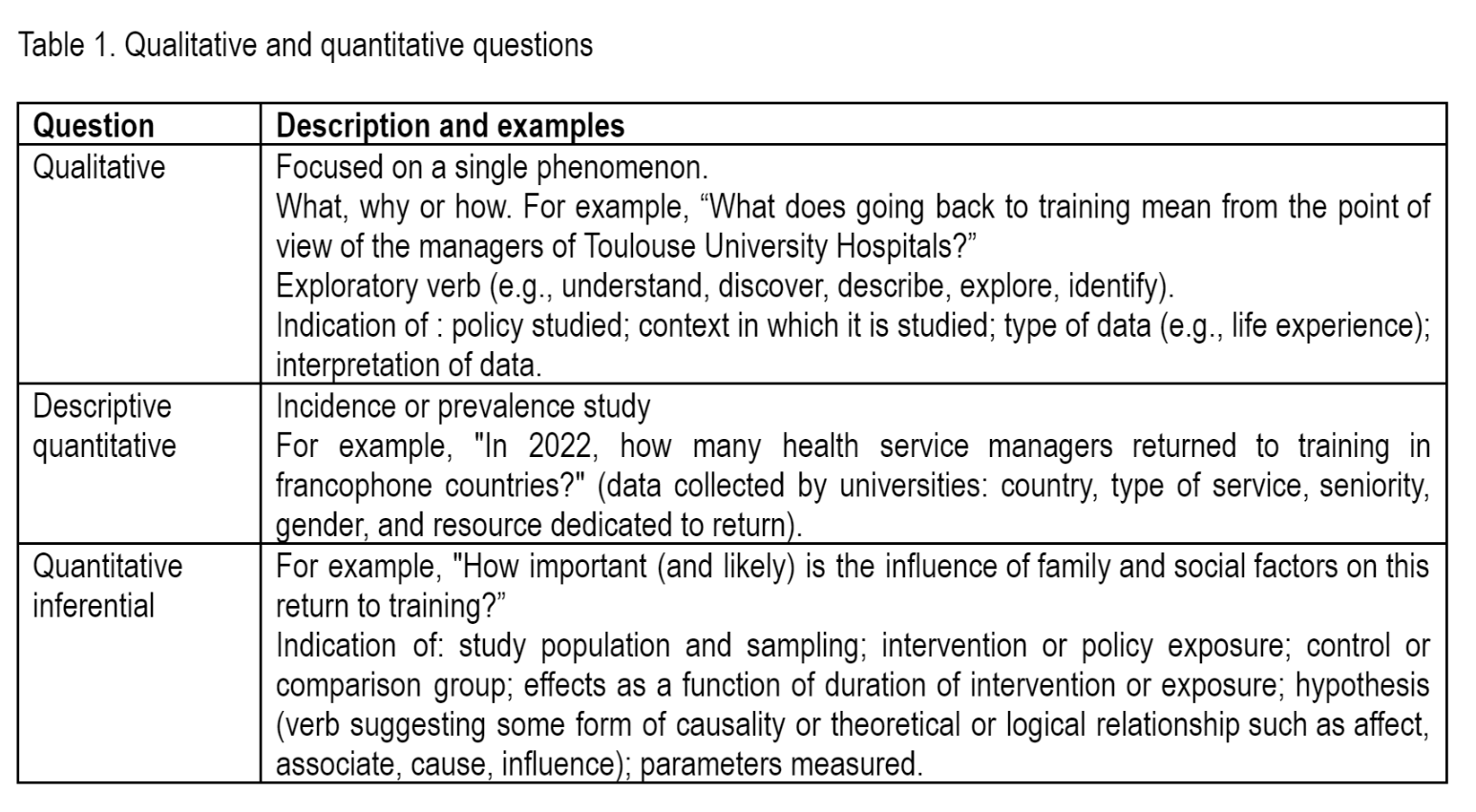

Mixed methods allow you to answer interdependent (e.g., sequential) or complementary (e.g., convergent) qualitative and quantitative evaluation or research questions about a public policy. For example, you may formulate a general mixed methods objective combining exploration and measurement, followed by specific qualitative and quantitative questions (see Table 1). Each question should be clearly formulated as a single interrogative sentence expressing a single idea. Evaluation and research questions usually arise from problems and challenges encountered in the creation, development, implementation (e.g., adaptation to the context) and sustainability (e.g., adjustment to changes in the context) of public policies. They may be imposed by management or suggested by stakeholders and users.

Conducting a mixed studies review (mixed methods literature review)

Any evaluation or research project is guided by existing knowledge. This knowledge comes from experts, grey literature (e.g., reports from public organisations, which can be identified with Google Scholar or OpenAlex) and publications indexed in bibliographic databases such as Cairn, Érudit and Scopus. The help of a librarian is invaluable. Start by reviewing the published literature (scientific articles, book chapters or theses). Identify the most relevant documents (those that answer your questions) and plan a knowledge update. Use reference management software (e.g., the free software Zotero) to keep track of the process and to make it easier to write the “Introduction” and “Discussion” sections of your report.

To update knowledge, mixed studies reviews combine quantitative, qualitative and/or mixed methods studies. They are becoming increasingly popular because they can answer qualitative and quantitative questions by drawing on the complementary knowledge derived from qualitative, quantitative and mixed methods studies. When a public policy and its effects have been well studied, such reviews can provide a thorough and comprehensive understanding of the policy across several contexts. The vast majority of literature reviews are not systematic (systematic reviews being expensive and time-consuming), but mixed studies reviews can be systematic, like any other type of review.

Choosing a mixed methods research design

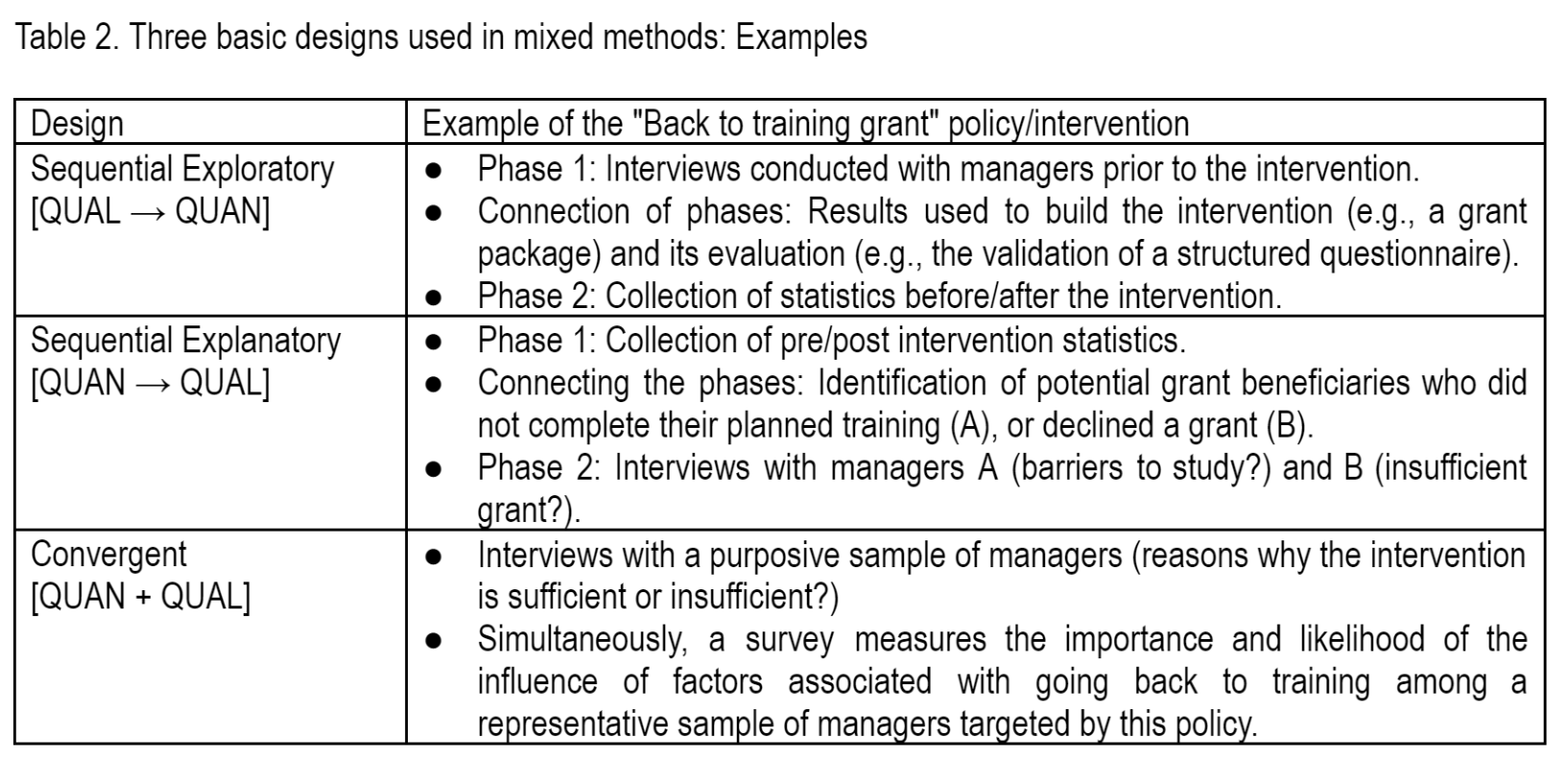

Mixed methods evaluation and research are usually based on three basic designs: sequential exploratory, sequential explanatory and convergent (see Table 2).

The sequential exploratory design [QUAL → QUAN] begins with qualitative data collection and analysis (QUAL). In this design, the results of qualitative phase 1 inform the data collection and analysis of quantitative phase 2 (QUAN). Phase 2 is thus based on a qualitative understanding of the participants’ perspective. This design involves first exploring the phenomenon of interest qualitatively, and then using the qualitative results to guide the sampling and construction of the subsequent quantitative data collection tool (integration).
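As a minimal sketch of the integration step in this design, the Python code below turns recurrent themes from phase 1 into draft agreement-scale items for the phase 2 questionnaire. The `Theme` and `SurveyItem` classes, the five-point scale and the `min_participants` threshold are hypothetical choices made for illustration; in practice, item wording and selection are decided by the team, and the instrument is pretested.

```python
from dataclasses import dataclass

# Hypothetical five-point agreement scale for the phase 2 (QUAN) questionnaire.
LIKERT_SCALE = ("Strongly disagree", "Disagree", "Neutral", "Agree", "Strongly agree")


@dataclass
class Theme:
    """A theme produced by the phase 1 (QUAL) thematic analysis."""
    label: str           # short name of the theme
    statement: str       # draft item wording, grounded in participants' own words
    n_participants: int  # number of interviewees who raised the theme


@dataclass
class SurveyItem:
    item_id: str
    text: str
    options: tuple[str, ...]


def build_instrument(themes: list[Theme], min_participants: int = 3) -> list[SurveyItem]:
    """Keep recurrent themes and turn each one into a questionnaire item."""
    recurrent = [t for t in themes if t.n_participants >= min_participants]
    return [
        SurveyItem(item_id=f"Q{i}", text=t.statement, options=LIKERT_SCALE)
        for i, t in enumerate(recurrent, start=1)
    ]


# Toy themes, invented for the example.
themes = [
    Theme("time", "I have enough time to apply the policy in my daily work.", 9),
    Theme("training", "The training I received prepared me to apply the policy.", 5),
    Theme("signage", "Posters about the policy are easy to find.", 1),
]
for item in build_instrument(themes):
    print(item.item_id, item.text)
```

Only the first two toy themes meet the assumed threshold of three participants, so the draft instrument contains two items.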

In the sequential explanatory design [QUAN → QUAL], quantitative data collection and analysis (phase 1) precede and inform qualitative data collection (phase 2). This design involves an initial quantitative assessment followed by a qualitative exploration of its results, so that the qualitative results help explain, for instance, unexpected or extreme quantitative results (integration).
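One way to connect the two phases is to flag units with extreme quantitative results and invite them to phase 2 interviews. The sketch below assumes that "extreme" means a standardised score (z-score) beyond a hypothetical threshold of 1.5; other criteria, such as residuals from a regression model or unexpected subgroup results, are equally legitimate.

```python
import statistics


def select_extreme_cases(scores: dict[str, float], z_threshold: float = 1.5) -> list[str]:
    """Return units whose score lies more than `z_threshold` sample standard
    deviations from the mean: candidates for phase 2 (QUAL) interviews."""
    values = list(scores.values())
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD; requires at least two units
    if sd == 0:
        return []  # no variation, hence no extreme case
    return sorted(
        unit for unit, score in scores.items()
        if abs(score - mean) / sd > z_threshold
    )


# Toy data: an outcome indicator for five hypothetical sites.
site_scores = {"Site A": 10, "Site B": 11, "Site C": 9, "Site D": 10, "Site E": 30}
print(select_extreme_cases(site_scores))  # ['Site E']
```

With this toy data, only Site E stands out (z ≈ 1.78); the qualitative phase would then explore why its results differ so markedly from the others.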

The convergent design [QUAN + QUAL] is the most frequently used. It combines qualitative and quantitative methods in an independent and complementary way: the collection and analysis of the qualitative and quantitative data do not depend on each other. They may or may not be conducted simultaneously; indeed, it is rare to have enough resources to do everything at once. Convergence (integration) occurs when the qualitative and quantitative results are interpreted together. This design involves collecting both qualitative and quantitative data to answer a similar question, formulated once in qualitative terms and once in quantitative terms.
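Integration in a convergent design is often presented as a joint display, a table that places the quantitative and qualitative results side by side for each shared dimension. The Python sketch below assumes that each result has been coded by the team as favourable (+1), unfavourable (-1) or unclear (0); the three labels returned by the hypothetical `assess_fit` function are a simplified classification chosen for illustration, not a standard one.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    dimension: str       # the shared question or dimension
    quan_result: str     # summary of the statistical result
    quan_direction: int  # +1 favourable, -1 unfavourable, 0 unclear
    qual_theme: str      # summary of the qualitative theme
    qual_direction: int  # same coding, assigned by the qualitative analysts


def assess_fit(f: Finding) -> str:
    """Compare the direction of the two results for one dimension."""
    if f.quan_direction == f.qual_direction:
        return "convergent"
    if 0 in (f.quan_direction, f.qual_direction):
        return "partly convergent"  # one strand is unclear
    return "divergent"  # opposite directions: calls for an explanation


def joint_display(findings: list[Finding]) -> str:
    """Render the findings as a simple pipe-separated table."""
    rows = [("Dimension", "QUAN result", "QUAL theme", "Fit")]
    rows += [(f.dimension, f.quan_result, f.qual_theme, assess_fit(f)) for f in findings]
    return "\n".join(" | ".join(row) for row in rows)


# Toy findings, invented for the example.
findings = [
    Finding("Access", "Waiting times fell", 1, "Users report faster access", 1),
    Finding("Cost", "No significant change", 0, "Staff describe extra workload", -1),
    Finding("Satisfaction", "Scores rose", 1, "Users feel less listened to", -1),
]
print(joint_display(findings))
```

Divergent rows are often the most informative, since they prompt the team to look for an explanation, or further data, that reconciles the two strands.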

Data collection and analysis

Data collection and analysis should take into account the available data sources and the specific qualitative or quantitative techniques needed to analyse them. Some procedures are themselves mixed, for example the Delphi technique (combining interviews and questionnaires with a medium-sized sample that may include experts from around the world). Because many statistical and qualitative analysis procedures and techniques can be used, this brief focuses on the integration of qualitative and quantitative methods.
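In a Delphi study, the quantitative part of each round often consists of summarising the panel's ratings for each item, while the experts' comments are analysed qualitatively and fed back to the panel. A minimal sketch of that quantitative summary follows, assuming numeric ratings and a hypothetical consensus rule (an interquartile range of at most 1); published Delphi studies use a variety of consensus rules.

```python
import statistics


def summarise_round(ratings: dict[str, list[int]], max_iqr: float = 1.0) -> dict[str, dict]:
    """Median, interquartile range (IQR) and consensus flag for each Delphi item.

    `max_iqr` is an assumed consensus threshold used for illustration only.
    """
    summary = {}
    for item, scores in ratings.items():
        q1, _, q3 = statistics.quantiles(scores, n=4, method="inclusive")
        iqr = q3 - q1
        summary[item] = {
            "median": statistics.median(scores),
            "iqr": iqr,
            "consensus": iqr <= max_iqr,
        }
    return summary


# Toy ratings (1 = not important ... 5 = very important) from five experts.
round_1 = {
    "Item A": [4, 4, 5, 5, 4],
    "Item B": [1, 5, 2, 4, 3],
}
for item, result in summarise_round(round_1).items():
    print(item, result)
```

Items without consensus (here, Item B) would typically be resubmitted in the next round, together with a summary of the panel's comments.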

Integration strategies

Plan any relevant combination of strategies to integrate qualitative and quantitative phases (connection), results (comparison) and data (assimilation). Based on a methodological review, we identified three types of integration and nine operational strategies (three per type of integration) for successfully integrating qualitative and quantitative methods. We also identified all possible combinations of these strategies (Pluye et al. 2018). These combinations have been confirmed in the literature on primary care, nursing, education, and the environmental and information sciences. To go further, specific integration techniques are described in a workbook (Fetters 2020).
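To make data integration (assimilation) more concrete, the sketch below shows one widely used technique, "quantitizing": qualitative codes are converted into presence/absence indicators that can be counted or merged with survey data for the same participants. This is an illustration on toy data, not a reproduction of the nine strategies reviewed by Pluye et al. (2018); the participant identifiers and codes are hypothetical.

```python
from collections import Counter


def quantitize(coded_interviews: dict[str, set[str]]) -> Counter:
    """Count, for each qualitative code, the participants whose interview carries it."""
    counts = Counter()
    for codes in coded_interviews.values():
        counts.update(codes)  # codes form a set, so each counts once per participant
    return counts


def merge_with_survey(
    coded_interviews: dict[str, set[str]],
    survey_scores: dict[str, int],
    codes: list[str],
) -> list[dict]:
    """One row per participant with both data types: score plus 0/1 code indicators."""
    rows = []
    for pid, score in survey_scores.items():
        if pid not in coded_interviews:
            continue  # not interviewed: absence of a code would be meaningless
        present = coded_interviews[pid]
        row = {"participant": pid, "score": score}
        row.update({code: int(code in present) for code in codes})
        rows.append(row)
    return rows


# Toy coded interviews and survey scores.
coded = {"P1": {"trust", "lack of time"}, "P2": {"trust"}, "P3": set()}
survey = {"P1": 4, "P2": 5, "P3": 2}
print(quantitize(coded))
for row in merge_with_survey(coded, survey, ["trust", "lack of time"]):
    print(row)
```

The merged rows can then be analysed statistically (e.g., comparing scores of participants with and without a given code), which is one way the two data types become a single dataset.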

II. How are these methods useful for policy evaluation?

Mixed methods have been developed in several fields since the 1970s. They formalise procedures and techniques for integrating qualitative and quantitative methods in evaluation and research (Pluye et al. 2019). In this way, they provide a greater understanding than the sum of the knowledge obtained separately with qualitative and quantitative methods. For example, they can answer statistical questions about the effects and costs of interventions, as well as qualitative questions about the processes behind them and about stakeholders’ experiences and perspectives.

III. An example of the use of mixed methods in the health sector

A governmental Health Technology Assessment (HTA) agency produces recommendations (e.g., guidelines on the optimal use of medicines and standards for the management of social services) and disseminates them nationally through professional associations, social services and health services. The agency’s management conducts evaluative research to justify the sustainability of this intervention (accountability). For each recommendation available on the agency’s website, a validated questionnaire (Granikov et al. 2020) allows users to assess its relevance, its cognitive impact (e.g., learning) and their intention to use it. Over a 2-year period, more than 6,000 responses were submitted and analysed (descriptive statistics). In addition, interviews were conducted with 15 users to identify the health-related effects of using the recommendations (thematic analysis). Integrating the statistics and themes made it possible to estimate the impact of the intervention (use and effects) and to add expected types of effects to the questionnaire.
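The integration step in this example can be sketched in a few lines. The code below is a hypothetical illustration with invented toy data, not the agency's actual analysis: it computes the proportion of respondents endorsing each questionnaire item (descriptive statistics), then lists the effect types that emerged from interviews but are not yet covered by the questionnaire, as candidates for new items. The item and theme labels are assumptions; in reality, matching themes to items is an interpretive task for the team.

```python
def item_proportions(responses: list[dict[str, bool]]) -> dict[str, float]:
    """Share of respondents answering 'yes' to each questionnaire item."""
    n = len(responses)
    items = responses[0].keys()
    return {item: sum(r[item] for r in responses) / n for item in items}


def effects_to_add(interview_themes: set[str], questionnaire_effects: set[str]) -> set[str]:
    """Effect types raised in interviews but missing from the questionnaire."""
    return interview_themes - questionnaire_effects


# Toy questionnaire responses (one dict per submitted response).
responses = [
    {"relevant": True, "learned something": True, "intends to use": True},
    {"relevant": True, "learned something": False, "intends to use": True},
    {"relevant": False, "learned something": False, "intends to use": False},
    {"relevant": True, "learned something": True, "intends to use": False},
]
print(item_proportions(responses))

# Toy themes from a thematic analysis of user interviews.
themes_from_interviews = {
    "learned something",
    "fewer medication errors",
    "better patient communication",
}
print(sorted(effects_to_add(themes_from_interviews, {"learned something"})))
```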

IV. What are the criteria for judging the quality of and reporting mixed methods?

Mixed methods must meet three necessary conditions (essential characteristics): (a) at least one qualitative and one quantitative method are integrated; (b) each method is used rigorously, according to the criteria generally accepted in the methodology or research tradition it relies on; and (c) the methods are integrated, at a minimum, through evaluation or research questions, a design, and a strategy for integrating qualitative and quantitative phases, results or data. Several tools apply these principles to assess the quality of mixed methods studies; their list is updated on the catevaluation.ca website. The most popular validated tool, the Mixed Methods Appraisal Tool (MMAT; Hong et al. 2018), is available free of charge online: it includes a checklist, a user manual and answers to frequently asked questions (mixedmethodsappraisaltoolpublic.pbworks.com).

In addition, many guides and manuals facilitate the writing of an evaluation report or scientific publication that uses mixed methods (Creswell and Plano Clark 2018). Their list is updated on the equator-network.org website. The GRAMMS (“Good Reporting of a Mixed Methods Study”) recommendations list six essential elements to include in a document based on mixed methods (O’Cathain, Murphy, and Nicholl 2008): (a) justify the use of mixed methods in relation to the research questions; (b) describe the mixed methods design (sequential or convergent); (c) detail the qualitative and quantitative methods used; (d) specify when, how, and by whom the methods were integrated; (e) present the limitations of the methods; and (f) indicate what each method contributed, as well as the complementary contribution of their integration.
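Teams sometimes track a reporting guideline as a simple checklist while drafting. The sketch below encodes the six GRAMMS elements as summarised in this section (paraphrased, not the official wording) and lists those a draft does not yet address; the `draft` flags are hypothetical.

```python
# The six GRAMMS elements, as paraphrased in this chapter (not the official wording).
GRAMMS_ELEMENTS = {
    "a": "Justify the use of mixed methods in relation to the research questions",
    "b": "Describe the design (sequential or convergent)",
    "c": "Detail the qualitative and quantitative methods used",
    "d": "Specify when, how and by whom integration was carried out",
    "e": "Present the limitations of the methods",
    "f": "Indicate what each method contributed and the added value of integration",
}


def missing_elements(addressed: dict[str, bool]) -> list[str]:
    """Return the GRAMMS elements not (yet) marked as addressed in a draft."""
    return [
        f"({key}) {text}"
        for key, text in GRAMMS_ELEMENTS.items()
        if not addressed.get(key, False)  # unlisted elements count as missing
    ]


# Hypothetical self-assessment of a draft report.
draft = {"a": True, "b": True, "c": True, "d": False, "e": True}
for element in missing_elements(draft):
    print(element)
```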

V. What are the strengths and limitations of mixed methods compared to other methods?

The advantages of mixed methods lie in the synergy between qualitative and quantitative methods: their integration adds value to the methods taken separately (Fetters and Freshwater 2015). However, mixed methods entail additional work to collect and analyse both words (sounds and images) and statistics, and to integrate qualitative and quantitative data and results. They can therefore be more time-consuming than a single method and require a multidisciplinary team with at least one expert in each of the selected methods. Finally, reporting them requires more space in a publication.

Some bibliographic references for further reading

Creswell, John, and Vicki Plano Clark. 2018. Designing and conducting mixed methods research. 3rd ed. Thousand Oaks: SAGE.

Fetters, Michael. 2020. The mixed methods research workbook: Activities for designing, implementing, and publishing projects. Thousand Oaks: SAGE.

Fetters, Michael, and Dawn Freshwater. 2015. “The 1 + 1 = 3 integration challenge.” Journal of Mixed Methods Research, 9(2): 115-17.

Granikov, Vera, Roland Grad, Reem El Sherif, Michael Shulha, Genevieve Chaput, Genevieve Doray, François Lagarde, Annie Rochette, David Li Tang, and Pierre Pluye. 2020. “The Information Assessment Method: Over 15 years of research evaluating the value of health information.” Education for Information, 36(1): 7-18.

Hong, Quan Nha, Sergi Fàbregues, Gillian Bartlett, Felicity Boardman, Margaret Cargo, Pierre Dagenais, Marie-Pierre Gagnon, Frances Griffiths, Belinda Nicolau, Alicia O’Cathain, et al. 2018. “The Mixed Methods Appraisal Tool (MMAT) version 2018 for information professionals and researchers.” Education for Information, 34(4): 285-91.

O’Cathain, Alicia, Elizabeth Murphy, and Jon Nicholl. 2008. “The quality of mixed methods studies in health services research.” Journal of Health Services Research and Policy, 13(2): 92-98.

Pluye, Pierre, Enrique García Bengoechea, Vera Granikov, Navdeep Kaur, and David Li Tang. 2018. “Tout un monde de possibilités en méthodes mixtes: revue des combinaisons des stratégies utilisées pour intégrer les phases, résultats et données qualitatifs et quantitatifs en méthodes mixtes” [A world of possibilities in mixed methods: a review of the combinations of strategies used to integrate qualitative and quantitative phases, results and data in mixed methods]. In Oser les défis des méthodes mixtes en sciences sociales et sciences de la santé [Taking on the challenges of mixed methods in the social and health sciences], edited by Mathieu Bujold, Quan Nha Hong, Valéry Ridde, Claude Julie Bourque, Maman Joyce Dogba, Isabelle Vedel, and Pierre Pluye, 28-48. Montréal: Association francophone pour le savoir.

Pluye, Pierre, Enrique García Bengoechea, David Li Tang, and Vera Granikov. 2019. “La pratique de l’intégration en méthodes mixtes” [The practice of integration in mixed methods]. In Évaluation des interventions de santé mondiale: méthodes avancées [Evaluating global health interventions: advanced methods], edited by Valéry Ridde and Christian Dagenais, 213-38. Québec: Éditions science et bien commun.

Pluye, Pierre, and Quan Nha Hong. 2014. “Combining the power of stories and the power of numbers: Mixed methods research and mixed studies reviews.” Annual Review of Public Health, 35: 29-45.